“Battlefield Map” Reveals Malicious COVID-19 Content Exploits Pathways Between Platforms To Thrive Online

Publish Date: 15 June, 2021

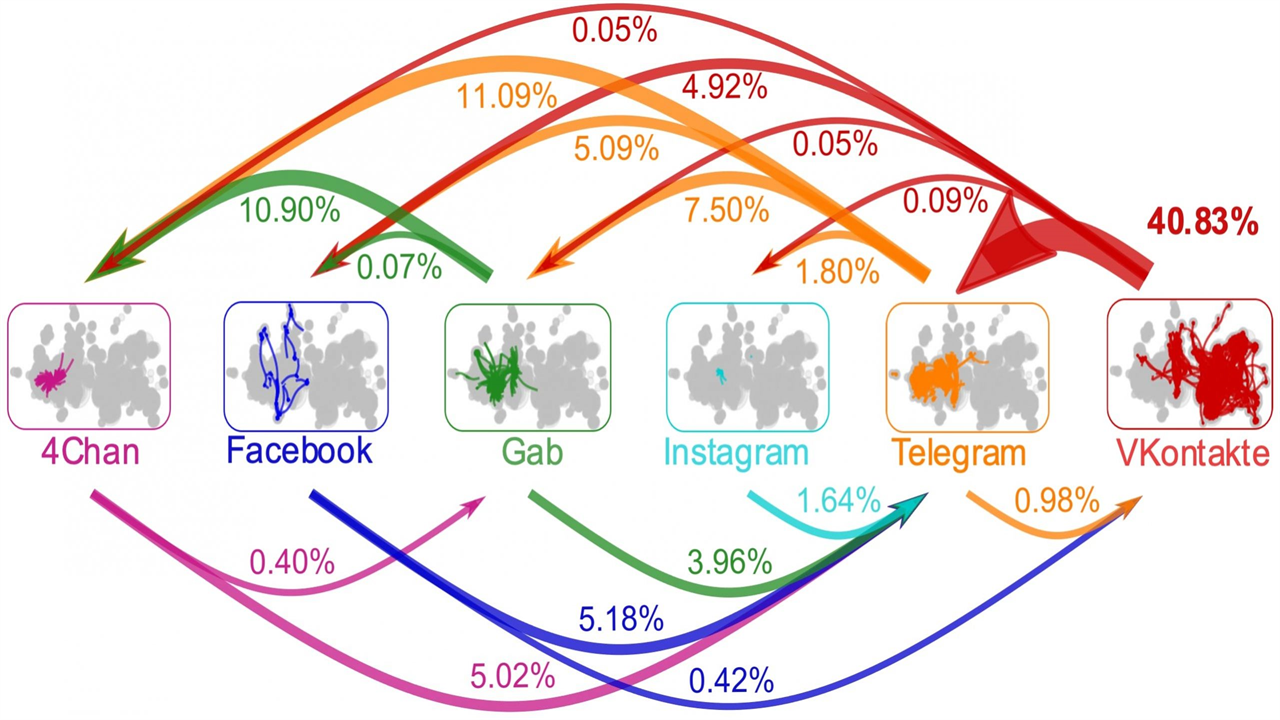

Malicious COVID-19 content (e.g. anti-Asian hate) uses pathways between social media platforms to spread online. Credit: Neil Johnson/GW

New research shows how stopping the spread of malicious content requires cross-platform action.

Malicious COVID-19 online content, including racist content, disinformation and misinformation, thrives and spreads online by evading the moderation efforts of individual social media platforms, according to new research published in the journal Scientific Reports. By mapping online hate clusters across six major social media platforms, researchers at George Washington University show how harmful content exploits pathways between platforms, highlighting the need for social media companies to rethink and adapt their content moderation policies.

Led by Neil Johnson, a professor of physics at GW, the research team sought to understand how and why malicious content thrives online despite significant moderation efforts, and how it can be stopped. The team used a combination of machine learning and network data science to explore how online hate communities honed COVID-19 as a weapon and used current events to attract new followers.

“Until now, slowing the spread of malicious content online has been like playing a game of whack-a-mole, because no map of the online hate multiverse existed,” said Johnson, who is also a researcher at the GW Institute for Data, Democracy & Politics. “You can’t win a battle if you don’t have a map of the battlefield. In our study, we created a unique map of this battlefield. Whether you look at traditional hate topics, such as anti-Semitism, or at anti-Asian racism surrounding COVID-19, the battlefield map is the same. And it is this map of links within and between platforms that is the missing piece to understanding how we can slow or stop the spread of hate content online.”

The researchers began by mapping how hate clusters interconnect to spread their narratives across social media platforms. Focusing on six platforms (Facebook, VKontakte, Instagram, Gab, Telegram and 4Chan), the team started with one particular hate cluster and looked outward to find a second cluster strongly linked to the original. They found that the strongest connections ran from VKontakte to Telegram (40.83% of cross-platform connections), from Telegram to 4Chan (11.09%), and from Gab to 4Chan (10.90%).
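As a rough illustration of how such percentages can be derived, the sketch below counts links between platforms and reports each platform pair's share of all cross-platform links. The link list is invented toy data and the function name `cross_platform_shares` is hypothetical; this is not the study's dataset or the researchers' code.

```python
from collections import Counter

# Hypothetical directed links between hate clusters, recorded as
# (source platform, target platform). Toy data, not the study's dataset.
links = [
    ("VKontakte", "Telegram"),
    ("VKontakte", "Telegram"),
    ("Telegram", "4Chan"),
    ("Gab", "4Chan"),
    ("Facebook", "Facebook"),  # within-platform link, excluded below
]


def cross_platform_shares(links):
    """Return each platform pair's share (in percent) of all cross-platform links."""
    # Keep only links whose source and target platforms differ.
    cross = [(src, dst) for src, dst in links if src != dst]
    total = len(cross)
    if total == 0:
        return {}
    counts = Counter(cross)
    return {pair: 100.0 * n / total for pair, n in counts.items()}


shares = cross_platform_shares(links)
# Print the pairs from the largest share to the smallest.
for (src, dst), pct in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{src} -> {dst}: {pct:.2f}%")
```

Within-platform links are dropped before the shares are computed, so the percentages describe only traffic that crosses from one platform to another.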

The researchers then turned their attention to identifying malicious content related to COVID-19. They found that the coherence of the COVID-19 discussion grew rapidly in the early stages of the pandemic, with hate clusters forming narratives and cohering around COVID-19 topics and misinformation. To undermine moderation efforts by social media platforms, groups sending hate messages used various adaptation strategies to regroup on other platforms and/or re-enter a platform, the researchers found. For example, clusters often change their names to avoid detection by moderators’ algorithms, such as changing “vaccine” to “va$$ine.” Similarly, anti-Semitic and anti-LGBTQ clusters simply add strings of ones or A’s before their names.
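To make the renaming tactic concrete, here is a minimal sketch of how a name could be normalized before it is matched against known terms. The substitution table, the prefix rule and the function name `normalize_name` are all assumptions made for illustration; the article does not describe how any platform's detection actually works.

```python
import re

# Hypothetical character substitutions. A real system would need a far
# larger table and would still miss many variants.
SUBSTITUTIONS = str.maketrans({"$": "c", "0": "o", "3": "e", "@": "a"})

# Assumed prefix rule: a run of two or more "1" or capital "A" characters
# at the start of a name, followed by optional separators.
PREFIX_PATTERN = re.compile(r"^(?:1{2,}|A{2,})[\s_\-]*")


def normalize_name(name):
    """Strip a padding prefix, lowercase, and undo simple character swaps."""
    stripped = PREFIX_PATTERN.sub("", name)
    return stripped.lower().translate(SUBSTITUTIONS)


print(normalize_name("va$$ine"))
print(normalize_name("1111 example group"))
print(normalize_name("AAAA example group"))
```

A rule this simple is easy to defeat and can also mangle legitimate names, for instance ones that contain digits or genuinely begin with a doubled capital A, which hints at why such evasion is hard to counter with pattern matching alone.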

“As the number of independent social media platforms grows, it is very likely that these hate-generating clusters will strengthen and expand their interconnections through new links, and they are likely to exploit new platforms that lie beyond the reach of the jurisdictions of the US and other Western countries,” said Johnson. “The chances of all social media platforms worldwide working together to solve this are very slim. However, our mathematical analysis identifies strategies that platforms as a group can use to effectively slow down or block hate content online.”

Based on their findings, the team proposes several ways for social media platforms to slow the spread of malicious content:

- Artificially extend the paths malicious content must take between clusters, increasing the chance of detection by moderators and slowing the spread of time-sensitive material, such as weaponized COVID-19 disinformation and violent content.
- Reduce the size of an online hate cluster’s support base by placing a cap on the size of clusters.
- Introduce non-malicious, mainstream content to effectively dilute the focus of a cluster.
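The first suggestion, lengthening the paths between clusters, can be pictured with a toy network. The sketch below measures the shortest route between two clusters before and after a direct link is removed. The graph, the cluster names and the choice to lengthen a path by removing a link are assumptions for illustration only; the article does not say how platforms would extend paths, and this is not the researchers' mathematical model.

```python
from collections import deque


def shortest_path_length(graph, start, goal):
    """Breadth-first search; return the hop count, or None if unreachable."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Hypothetical clusters A to D; each edge is a link from one cluster to another.
graph = {"A": ["B", "D"], "B": ["C"], "C": ["D"], "D": []}
before = shortest_path_length(graph, "A", "D")

# Assumed intervention: remove the direct link A -> D, so content must
# travel through B and C, passing more points where it could be detected.
pruned = {
    node: [n for n in nbrs if (node, n) != ("A", "D")]
    for node, nbrs in graph.items()
}
after = shortest_path_length(pruned, "A", "D")

print(f"hops before: {before}, hops after: {after}")
```

In this toy case the route grows from one hop to three, so the content passes through two extra clusters on its way.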

“Our study shows a similarity between the spread of online hate and the spread of a virus,” said Yonatan Lupu, associate professor of political science at GW and co-author of the paper. “Individual social media platforms have struggled to contain the spread of online hate, reflecting the difficulty individual countries around the world have had in stopping the spread of the COVID-19 virus.”

Johnson and his team are already using their map and its mathematical modeling to analyze other forms of malicious content, including the weaponization of COVID-19 vaccines, in which certain countries try to manipulate mainstream sentiment for nationalist gains. They are also examining the extent to which individual actors, including foreign governments, may play a more influential or controlling role in this space than others.

Reference: June 15, 2021, Scientific Reports.

DOI: 10.1038/s41598-021-89467